The Evolution of Web Scraping in 2026: Ethics, Real-Time APIs, and Headless Strategies

In 2026 web scraping is no longer just scripts and proxies — it's about real-time collaboration, ethical data pipelines, and systems that play nice with modern protocols. This guide maps the advanced strategies you'll need now.

If you still think web scraping is about a single cron job and a few rotating IPs, it's time to catch up. By 2026, the practice has matured into a discipline that blends real-time API design, rigorous ethics, and resilient, low-latency delivery.

Why 2026 is different

Teams are building data products that rely on ethically sourced, near-real-time data feeds. From market intelligence to content aggregation and ML training sets, the tolerance for stale, brittle scrapers has collapsed. Today's challenges demand new approaches: distributed headless browsing, observability for data quality, and governance frameworks that satisfy both legal teams and platform operators.

"The difference this year is not just speed — it's accountability. Consumers, partners, and regulators expect traceability for every row of data."

Core trends shaping scraping in 2026

- Real-time scraping APIs: Instead of batch archives, teams expose streaming endpoints that supply validated deltas.

- Headless mesh architectures: Lightweight browser instances orchestrated across edge nodes reduce latency and avoid single-region throttles.

- Ethical verification: Signed data provenance and consent flags are integrated into pipelines.

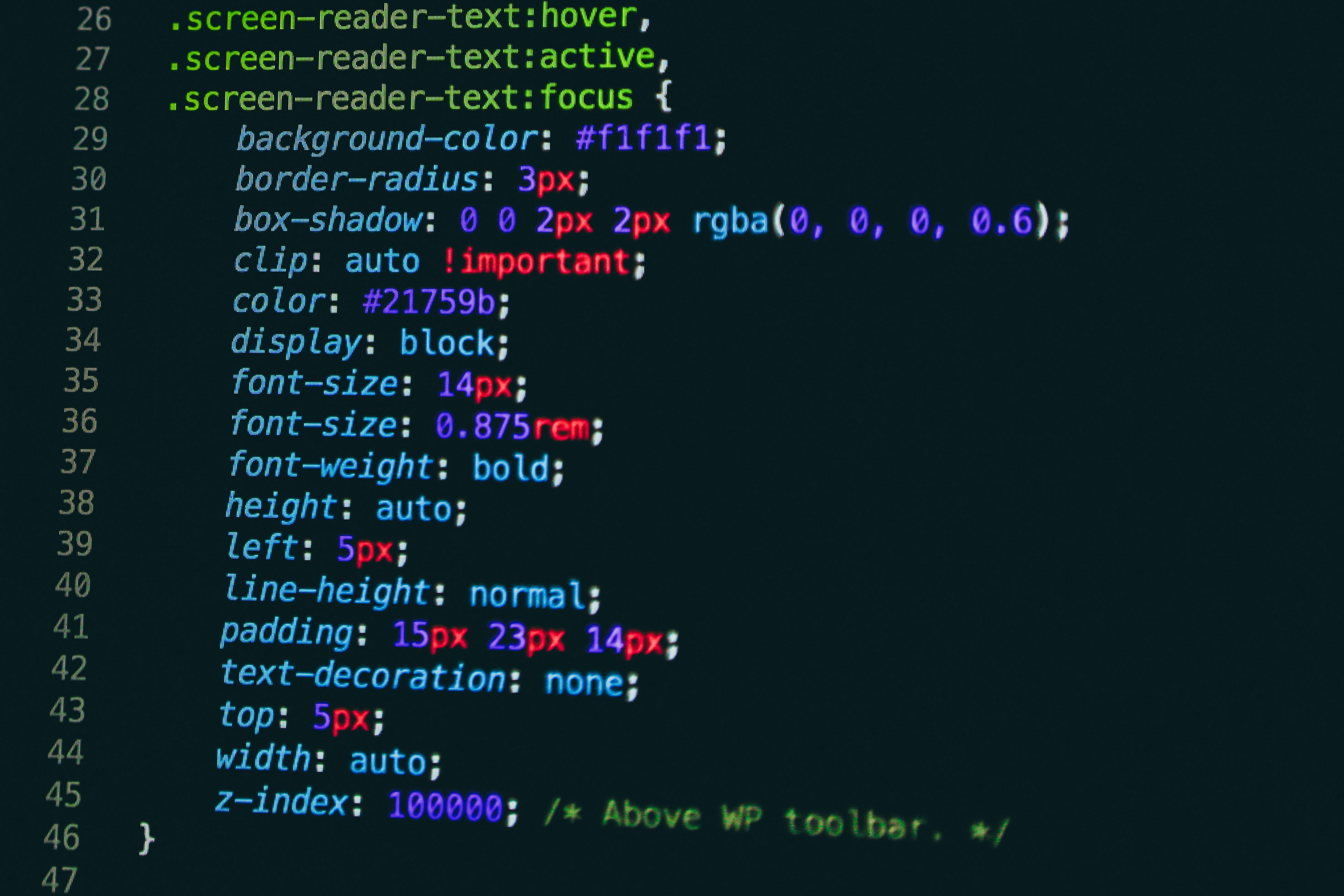

- Developer ergonomics: Prebuilt adapters for common frameworks simplify DOM-driven logic, and upcoming ECMAScript features promise further gains (see the ECMAScript 2026 proposal roundup for the most relevant ones).

- Interoperability with privacy-forward APIs: Platforms that expose structured feeds are increasingly the preferred integration target.
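
To make "validated deltas" concrete: a streaming endpoint can diff the latest keyed snapshot against the previous one and publish only records that changed and pass validation. The Python below is a minimal sketch under stated assumptions: records are dicts keyed by a stable identifier, and the validation rule is a hypothetical placeholder supplied by the caller. Transport (WebSockets, server-sent events, a message queue) is out of scope.

```python
from typing import Any, Callable, Dict, Iterator, Tuple

Record = Dict[str, Any]


def compute_deltas(
    previous: Dict[str, Record],
    current: Dict[str, Record],
    is_valid: Callable[[Record], bool],
) -> Iterator[Tuple[str, str, Record]]:
    """Yield (op, key, record) tuples describing changes between two snapshots.

    op is "upsert" for new or modified records that pass validation and
    "delete" for keys that disappeared. Unchanged records are not re-sent,
    and invalid records are withheld rather than published.
    """
    for key, record in current.items():
        if previous.get(key) != record and is_valid(record):
            yield ("upsert", key, record)
    # Sort removed keys so the delete order is deterministic.
    for key in sorted(previous.keys() - current.keys()):
        yield ("delete", key, previous[key])
```

A design note: a record that changes but fails validation is simply not emitted, so consumers keep the last good value instead of receiving a broken one, and the failure can be routed to an alerting path instead.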

Advanced strategies for resilient scraping

Below are field-tested tactics used by engineering teams in 2026 to keep feeds reliable and low-risk.

- Design for partial failure: Build pipelines that tolerate degraded fields with schema evolution and feature flags. Use circuit breakers and graceful degradation in downstream models.

- Implement signed provenance: Attach cryptographic evidence to ingested records showing which headless node fetched the page, what selector logic was used, and a fingerprint of the HTML snapshot so clients can audit changes.

- Leverage context-aware scheduling: Run heavier headless renders during off-peak windows for a target domain; prefer lightweight HTTP fetches with HTML parsing where possible.

- Observability and SLOs: Monitor content freshness, field-level accuracy, and time-to-fix. Tie SLOs back to business impact so engineers can prioritize remediation.

- Use domain-specific adapters: For critical partners, maintain an adapter that consumes their structured feed if available — this reduces scraping cost and compliance risk.
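
One way to apply the "design for partial failure" advice is to wrap each field extractor in a circuit breaker that returns a degraded placeholder instead of failing the whole record. This is a minimal Python sketch; the class name, thresholds, and the idea of one breaker per field are illustrative assumptions, not a prescribed design.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Skip a failing extractor for `cooldown` seconds after `max_failures`
    consecutive errors, then allow a single trial call (half-open state)."""

    def __init__(self, max_failures: int = 3, cooldown: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.cooldown = cooldown
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def is_open(self) -> bool:
        if self.opened_at is None:
            return False
        # After the cooldown the breaker is half-open: one trial call is allowed.
        return self.clock() - self.opened_at < self.cooldown

    def call(self, fn: Callable[[], T], fallback: T) -> T:
        """Run fn; return fallback (a degraded value) if open or if fn raises."""
        if self.is_open():
            return fallback
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            return fallback
        # A success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

Passing an injectable clock keeps the breaker testable; the default `time.monotonic` means wall-clock adjustments cannot open or close the circuit unexpectedly. Downstream schemas can treat the fallback value (for example `None`) as an explicitly degraded field.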
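
Signed provenance can be prototyped with an HMAC over the record plus its fetch metadata. The sketch below assumes a shared secret key and uses hypothetical field names (`node_id`, `selector_version`, `html_sha256`); a production system would more likely use asymmetric signatures so that clients can verify records without holding the signing key.

```python
import hashlib
import hmac
import json
from typing import Any, Dict


def _canonical(data: Dict[str, Any], provenance: Dict[str, Any]) -> bytes:
    # Sorted keys and fixed separators so signer and verifier hash identical bytes.
    return json.dumps({"data": data, "provenance": provenance},
                      sort_keys=True, separators=(",", ":")).encode("utf-8")


def attach_provenance(record: Dict[str, Any], html: str, node_id: str,
                      selector_version: str, key: bytes) -> Dict[str, Any]:
    """Return a (shallow) copy of record wrapped with a signed provenance block."""
    data = dict(record)
    provenance = {
        "node_id": node_id,                    # which headless node fetched the page
        "selector_version": selector_version,  # which extraction logic ran
        "html_sha256": hashlib.sha256(html.encode("utf-8")).hexdigest(),
    }
    provenance["signature"] = hmac.new(key, _canonical(data, provenance),
                                       hashlib.sha256).hexdigest()
    return {"data": data, "provenance": provenance}


def verify_provenance(signed: Dict[str, Any], key: bytes) -> bool:
    """Recompute the HMAC over data + provenance (minus signature) and compare."""
    provenance = dict(signed["provenance"])
    signature = provenance.pop("signature", "")
    expected = hmac.new(key, _canonical(signed["data"], provenance),
                        hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signature)
```

Canonical serialization matters here: if signer and verifier encode the same data with different key order or whitespace, valid records fail verification. `hmac.compare_digest` avoids leaking timing information during the comparison.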
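
Context-aware scheduling reduces to a per-page decision: use a plain HTTP fetch when the page renders without JavaScript, and reserve headless renders for the target's off-peak window. In this Python sketch, the `DomainProfile` fields, the `needs_js` flag, and the off-peak hours are all assumptions you would populate from your own measurements.

```python
from dataclasses import dataclass
from datetime import datetime, time as dtime, timedelta, timezone


@dataclass
class DomainProfile:
    utc_offset_hours: float  # target site's local UTC offset (assumed known)
    off_peak_start: dtime    # start of the site's quiet window, local time
    off_peak_end: dtime      # end of the quiet window, local time


def in_window(local: dtime, start: dtime, end: dtime) -> bool:
    """True if local falls in [start, end); handles windows that cross midnight."""
    if start <= end:
        return start <= local < end
    return local >= start or local < end


def choose_fetch_mode(profile: DomainProfile, needs_js: bool, now_utc: datetime) -> str:
    """Return "http", "headless", or "defer" for one page; now_utc must be tz-aware."""
    if not needs_js:
        return "http"  # cheapest option: plain HTTP fetch plus HTML parsing
    tz = timezone(timedelta(hours=profile.utc_offset_hours))
    local_time = now_utc.astimezone(tz).time()
    if in_window(local_time, profile.off_peak_start, profile.off_peak_end):
        return "headless"
    return "defer"  # re-queue the heavy render for the next off-peak window
```

A fixed UTC offset ignores daylight saving time; `zoneinfo.ZoneInfo` is a better fit where DST matters.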

Tooling and language choices

Node.js remains popular for orchestration, but teams are mixing runtimes to optimize for cost and latency. ECMAScript proposals in 2026 target language features that would simplify async DOM streaming and make pattern matching in parsers more robust; the ECMAScript 2026 proposal roundup is a useful reference when choosing toolchains.

Case study: From brittle spider to real-time feed

A mid-sized content aggregator converted a legacy cron-based scraper into a streaming API. They reduced end-to-end errors by 60% and shortened time-to-surface by 80% by combining these practices: deploying lightweight headless workers at the edge, instrumenting field-level checks, and handing content teams a dashboard that surfaced anomalies.

Cross-discipline lessons you should borrow

- Borrow editorial process habits: use a 30-day editorial blueprint to introduce small habits that drive quality improvements in data pipelines; the editorial playbook has strong parallels to QA for scrapers (Small Habits, Big Shifts for Editorial Teams).

- Collaborate with UX and calendar scheduling teams when scraping event pages: event planners often change markup close to launch, and guides such as "How to Plan an Event End-to-End Using Calendar.live" show how calendar-aware integrations can reduce breakage.

- If you aggregate creator commerce or merch data, study micro-run strategies; these limited drops cause bursty traffic patterns and require adaptive scheduling (Merch Micro‑Runs: How Top Creators Use Limited Drops).
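
Bursty drops are a good fit for adaptive polling: tighten the interval while a page keeps changing and back off when it goes quiet. The Python below is a minimal sketch; the divide-by-4 on change, the doubling on no change, and the interval bounds are illustrative assumptions. Keep `min_interval` at or above whatever request rate the platform permits.

```python
from dataclasses import dataclass


@dataclass
class AdaptivePoller:
    """Shorten the polling interval while a page is changing (e.g. during a
    limited drop) and back off exponentially once it goes quiet."""
    min_interval: float = 15.0    # seconds; politeness floor during bursts (assumed)
    max_interval: float = 3600.0  # seconds; ceiling when idle (assumed)
    interval: float = 600.0       # current interval, seconds

    def next_interval(self, changed: bool) -> float:
        if changed:
            # React quickly to activity, but never poll faster than the floor.
            self.interval = max(self.min_interval, self.interval / 4)
        else:
            # Back off gradually while nothing changes.
            self.interval = min(self.max_interval, self.interval * 2)
        return self.interval
```

Shrinking faster than it grows lets the poller catch the start of a drop quickly while still settling back to cheap, infrequent checks afterward.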

Risk, compliance, and platform relationships

In 2026, regulators and platform teams expect clear disclosure and contracts for any high-frequency data extraction. When possible, prefer partner APIs. When scraping remains necessary, keep an open channel with platform operators and adopt a permissions-first approach to avoid legal friction. Adjacent industries also offer custody and security playbooks: institutional platforms have published guidance on secure custody and integrations that can inform how you protect valuable datasets (How Institutional Custody Platforms Matured by 2026).
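
A small, concrete piece of a permissions-first approach is honoring a site's robots.txt, including any `Crawl-delay`. Python's standard library ships `urllib.robotparser` for this. The sketch parses lines you have already fetched or cached (`set_url()` plus `read()` fetches them directly); the user-agent string and fallback delay are assumptions. This complements, rather than replaces, the disclosure and contracts discussed above.

```python
from typing import Iterable, Optional
from urllib.robotparser import RobotFileParser

# Hypothetical user agent; identify your crawler honestly, ideally with contact details.
USER_AGENT = "ExampleFeedBot/1.0"


def load_policy(robots_lines: Iterable[str]) -> RobotFileParser:
    """Build a parser from robots.txt lines that were already fetched or cached."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return parser


def permitted(parser: RobotFileParser, url: str) -> bool:
    """Check whether our user agent may fetch this URL."""
    return parser.can_fetch(USER_AGENT, url)


def polite_delay(parser: RobotFileParser, default: float = 5.0) -> float:
    """Use the site's Crawl-delay if it declares one, else a conservative default."""
    delay: Optional[int] = parser.crawl_delay(USER_AGENT)
    return float(delay) if delay is not None else default
```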

Future predictions

- Increased adoption of signed provenance for scraped records, driven by demands from ML teams and auditors.

- Edge headless networks will replace single-region farms for latency-sensitive feeds.

- More platforms will ship structured change feeds, making scraping a fallback rather than the default.

Get started checklist

- Audit current scrapers for single points of failure and legal risk.

- Introduce field-level validation and provenance metadata in outputs.

- Pilot an edge headless instance for one domain and measure latency improvements.

- Document governance: who can request data, retention, and redaction policies.

- Train the product team on observability dashboards and SLOs.
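
For the field-level validation item, one starting point is to compute a per-field pass rate for each batch and compare it with an SLO target; the same numbers can feed the observability dashboards. The rules, field names, and 0.98 target below are hypothetical placeholders for a product feed.

```python
from typing import Any, Callable, Dict, Iterable, List

Validator = Callable[[Any], bool]

# Hypothetical rules for a product feed; real rules come from your schema.
RULES: Dict[str, Validator] = {
    "title": lambda v: isinstance(v, str) and v.strip() != "",
    "price": lambda v: isinstance(v, (int, float)) and not isinstance(v, bool) and v >= 0,
    "url": lambda v: isinstance(v, str) and v.startswith("https://"),
}


def field_pass_rates(records: Iterable[Dict[str, Any]],
                     rules: Dict[str, Validator] = RULES) -> Dict[str, float]:
    """Fraction of records whose value for each field passes that field's rule."""
    passed = {field: 0 for field in rules}
    total = 0
    for record in records:
        total += 1
        for field, rule in rules.items():
            if rule(record.get(field)):  # missing fields count as failures
                passed[field] += 1
    if total == 0:
        return {}  # no data: leave empty batches to freshness checks
    return {field: passed[field] / total for field in rules}


def slo_breaches(rates: Dict[str, float], target: float = 0.98) -> List[str]:
    """Fields whose pass rate fell below the (assumed) SLO target."""
    return sorted(field for field, rate in rates.items() if rate < target)
```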

Final word: Web scraping in 2026 is a production discipline that sits at the intersection of engineering, law, and product. The teams that win will be those that balance high-quality, low-latency delivery with transparent governance and strong relationships with platforms.

Contributor

Senior editor and content strategist. Writing about technology, design, and the future of digital media. Follow along for deep dives into the industry's moving parts.
