Vertical Video and Its Impact on Data Scraping Practices

How vertical video changes scraping: format, manifests, edge strategies, tooling, and compliance for reliable media extraction.

Vertical video has moved from a mobile-first novelty to the dominant media format on platforms like TikTok, Instagram Reels, and YouTube Shorts. For engineers and platform teams building data extraction and media analysis pipelines, that shift changes how you detect and download media, how you architect edge agents and transcode at scale, and how you stay compliant as anti-bot defenses evolve. This guide covers the technical, architectural, and operational implications of vertical video for web scraping, media extraction, and downstream analysis.

1. Why Vertical Video Matters for Scrapers

1.1 Rapid format adoption and volume

Short-form vertical video has accelerated content churn and distribution velocity. Platforms prioritize vertical formats in feeds and recommendation engines, producing an explosion of small media assets — often dozens of short videos per user per day. That volume changes quotas, storage patterns, and retention strategies for scraping systems. For background on how creators and community commerce scale, see our analysis of Micro‑Drops, Preorder Kits and Community Commerce.

1.2 New metadata and UX signals

Vertical content often carries platform-specific metadata (e.g., music track IDs, aspect-ratio tags, sticker overlays) and ephemeral signals (view counts, completion rates) that matter for analytics. Scrapers must adapt to capture both binary media and these new structured signals and interpret them for ML models and business logic.

1.3 Creator workflows and production techniques

Producers build vertical-first workflows: native vertical framing, text overlay timing, and audio-first mixing. If your scraping target is creator analytics or ad measurement, consider reading creative playbooks like Create a Microdrama Walking Series and the Micro‑Documentaries playbook to understand production cues that become useful labels for ML.

2. Technical Differences: Vertical vs Horizontal Media

2.1 Aspect ratios, codecs, and container nuances

Vertical videos commonly use 9:16 or 4:5 aspect ratios. They are delivered as MP4, fragmented MP4 (fMP4), HLS/DASH segmented streams, or platform-specific wrappers. Many scrapers assume standard 16:9 assets, and that assumption breaks when downstream media processors (thumbnailers, OCR) expect landscape frames with centered content.

2.2 Streaming manifests and segment handling

Platforms frequently serve vertical short-form videos through HLS/DASH manifests with short segments (1–3s). Scrapers that download only a single initial segment risk incomplete captures; instead, implement manifest parsing and segment concatenation with tools like FFmpeg. Headless orchestration can help capture playback headers and dynamic token exchanges — see our guide to Headless Scraper Orchestration in 2026 for patterns to manage ephemeral manifests.
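To make manifest parsing concrete, here is a minimal sketch of extracting absolute segment URLs from an HLS media playlist. It assumes a simple media playlist (not a master playlist) without byte ranges or encryption. The function name `parseMediaPlaylist` and the example CDN URL are illustrative, not taken from any platform.

```javascript
// Minimal sketch: extract absolute segment URLs from an HLS media playlist.
// Assumes a plain media playlist (no master playlist, byte ranges, or encryption keys).
function parseMediaPlaylist(playlistText, playlistUrl) {
  const segments = [];
  let initSegment = null;
  let pendingDuration = null;

  for (const rawLine of playlistText.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === '') continue;

    if (line.startsWith('#EXT-X-MAP:')) {
      // fMP4 streams declare an initialization segment that must precede the media segments.
      const match = line.match(/URI="([^"]+)"/);
      if (match) initSegment = new URL(match[1], playlistUrl).href;
    } else if (line.startsWith('#EXTINF:')) {
      // Format: "#EXTINF:<duration>,<optional title>"
      pendingDuration = parseFloat(line.slice('#EXTINF:'.length));
    } else if (!line.startsWith('#')) {
      // Any non-tag line is a segment URI, possibly relative to the playlist URL.
      segments.push({ url: new URL(line, playlistUrl).href, duration: pendingDuration });
      pendingDuration = null;
    }
  }
  return { initSegment, segments };
}

// Hypothetical playlist with two short segments.
const sample = [
  '#EXTM3U',
  '#EXT-X-TARGETDURATION:2',
  '#EXTINF:2.0,',
  'seg0.ts?token=abc',
  '#EXTINF:1.5,',
  'seg1.ts?token=abc',
  '#EXT-X-ENDLIST',
].join('\n');

const parsed = parseMediaPlaylist(sample, 'https://cdn.example.com/v/123/index.m3u8');
console.log(parsed.segments.map((s) => s.url));
```

Resolving each URI against the playlist URL matters because short-form platforms often use relative segment paths that carry their own query-string tokens.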

2.3 Watermarks, overlays, and composited layers

Vertical platforms often apply dynamic overlays (ads, music credits, watermarks). Decide whether you need raw media, composited final renders, or both. For many analytics workloads, extracting visible overlays and then stripping them for feature extraction produces the best results.

3. Challenges for Data Extraction from Vertical Video

3.1 Detecting orientation reliably

Orientation detection is trivial if metadata is available, but many platforms re-encode or strip metadata. Implement a fallback that samples frames (first, middle, last) and infers orientation via width/height comparison and a quick edge-detection pass that verifies subject placement.
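The width/height part of that fallback can be sketched as follows. This is only the dimension comparison with a majority vote across sampled frames. The edge-detection pass is not shown, and the frame objects (`width`, `height`, optional `rotation`) are an assumed shape that your frame decoder would need to supply.

```javascript
// Sketch: classify orientation from decoded frame dimensions.
// `rotation` is the display rotation in degrees, if a probe reported one (assumed optional).
function classifyOrientation(width, height, rotation = 0) {
  if (!(width > 0 && height > 0)) throw new RangeError('width and height must be positive');
  // A 90 or 270 degree rotation swaps the displayed width and height.
  const normalized = ((rotation % 360) + 360) % 360;
  const swapped = normalized === 90 || normalized === 270;
  const displayW = swapped ? height : width;
  const displayH = swapped ? width : height;

  const ratio = displayW / displayH;
  if (Math.abs(ratio - 1) < 0.05) return 'square';
  return ratio < 1 ? 'vertical' : 'horizontal';
}

// Majority vote across sampled frames (for example first, middle, and last).
// Expects at least one frame.
function inferOrientation(frames) {
  const counts = {};
  for (const f of frames) {
    const label = classifyOrientation(f.width, f.height, f.rotation);
    counts[label] = (counts[label] || 0) + 1;
  }
  return Object.entries(counts).sort((a, b) => b[1] - a[1])[0][0];
}
```

The 5% tolerance around a 1:1 ratio is an arbitrary choice for this sketch; tune it to the aspect ratios you actually see.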

3.2 Dealing with adaptive streaming and tokenized endpoints

Short-form platforms use expiring tokens and server-side rate limits. Use headless browser sessions to initiate legitimate playback, then capture the network requests for the actual media endpoints. For resilient orchestration across many targets, review patterns in Headless Scraper Orchestration in 2026 and hardening advice for agents from Autonomous Desktop Agents: Security Threat Model.
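One way to respect token lifetimes is to cache captured media URLs only while their token is still valid. The sketch below assumes the expiry is exposed as an `expires` query parameter in Unix seconds. That parameter name is hypothetical, since real platforms encode expiry differently or not at all, and the class name `ExpiringUrlCache` is also illustrative.

```javascript
// Sketch: cache media URLs only while their token is still valid.
// ASSUMPTION: expiry is a Unix-seconds `expires` query parameter (hypothetical name).
class ExpiringUrlCache {
  constructor(safetyMarginMs = 5000, now = () => Date.now()) {
    this.entries = new Map();
    this.safetyMarginMs = safetyMarginMs; // treat URLs as expired slightly early
    this.now = now; // injectable clock, which makes the cache easy to test
  }

  // Returns true if the URL was cached, false if its lifetime could not be determined.
  set(key, url) {
    const expires = Number(new URL(url).searchParams.get('expires'));
    if (!Number.isFinite(expires) || expires <= 0) return false;
    this.entries.set(key, { url, expiresAtMs: expires * 1000 });
    return true;
  }

  // Returns the cached URL, or null if it is missing or too close to expiry.
  get(key) {
    const entry = this.entries.get(key);
    if (!entry) return null;
    if (this.now() + this.safetyMarginMs >= entry.expiresAtMs) {
      this.entries.delete(key);
      return null;
    }
    return entry.url;
  }
}
```

A `null` from `get` is the signal to go back to the headless session for a fresh URL rather than retrying the stale one.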

3.3 Anti-bot defenses and AI blocking

Platforms are improving bot detection and even AI-based fingerprinting. When bots are blocked or throttled, adaptive strategies (respectful backoff, residential proxies, and randomized client behaviors) are needed. For a broader take on platform-side AI blocking, read What to Expect When AI Bots Block Your Content and the implications for content creators in Blocking AI Crawlers: What It Means for Avatar and Content Creators.

4. Architecture Patterns That Matter

4.1 Edge-first ingestion

Because vertical video is latency-sensitive (streams, ephemeral tokens), extract close to the consumer edge when possible. Edge agents can fetch manifests and assemble segments quickly, reducing failed downloads. See guidance on edge-first strategies in Edge‑First Onboard Connectivity for Bus Fleets and the competitive advantages discussed in Low‑Latency Edge price feeds.

4.2 Centralized processing vs distributed transcode

Centralized transcoding simplifies quality control but increases egress and latency. Consider a hybrid approach: edge agents perform a fast, lossless concatenation and simple validation, while central workers transcode for analysis and long-term storage.
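A lossless concatenation step on the edge could use FFmpeg's concat demuxer with stream copy, so nothing is re-encoded. The helpers below only build the list file contents and the argument array. They assume locally downloaded MPEG-TS segments with identical codec parameters, and the function names are illustrative.

```javascript
// Sketch: build inputs for FFmpeg's concat demuxer with stream copy (no re-encode).
// ASSUMPTION: segments are local files that share the same codec parameters.
function buildConcatList(segmentPaths) {
  // Each line is: file '<path>'. A single quote inside a path is written as '\''.
  return segmentPaths
    .map((p) => `file '${p.replace(/'/g, "'\\''")}'`)
    .join('\n') + '\n';
}

function buildConcatArgs(listPath, outputPath) {
  // -safe 0 allows absolute paths in the list file; -c copy avoids transcoding.
  return ['-f', 'concat', '-safe', '0', '-i', listPath, '-c', 'copy', outputPath];
}

console.log(buildConcatList(['seg0.ts', 'seg1.ts']));
console.log(buildConcatArgs('segments.txt', 'clip.mp4').join(' '));
```

In practice you would write the list to a temporary file and pass the argument array to `child_process.execFile('ffmpeg', args)`. Keeping arguments as an array avoids shell quoting problems with unusual file names.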

4.3 Headless orchestration layered with agent renewal

Manage thousands of authenticated headless browser sessions with an orchestration layer that can refresh agents, rotate credentials, and route through appropriate proxies. The orchestration patterns we recommend align with approaches in Headless Scraper Orchestration in 2026.

5. Tools, APIs, and Libraries: What to Choose

5.1 Headless browsers and mobile emulation

Playwright and Puppeteer remain the most developer-friendly options for mobile emulation; they let you capture the real network requests the client makes. To scale over the long term, invest in an orchestration layer that can run these agents in ephemeral containers and reclaim resources quickly.

5.2 Media processing and feature extraction

FFmpeg is the de-facto tool for frame extraction, concatenation, and format normalization. For feature extraction (faces, OCR, audio fingerprints), use optimized inference runners at the edge and a central GPU pool for heavy batch work. If you need translation QA for captions and speech-to-text at scale, our recommended pipeline patterns are described in AI-augmented Translation QA Pipeline.

5.3 Off-the-shelf ingestion services vs custom crawlers

Third-party media ingestion APIs speed time-to-value but can be costly and opaque. For teams that need deep metadata and control, a customized stack (headless capture + manifest parser + FFmpeg) is best. For proof-of-concept or commercial extraction, combining both approaches often wins.

Pro Tip: For high-throughput vertical video ingestion, prioritize manifest parsing and segment assembly over naive full-page downloads — you’ll reduce bandwidth by 40–70% on many platforms.

6. Comparison Table: Extraction Approaches for Vertical Video

The table below compares common approaches. Use it to evaluate tradeoffs for cost, resilience, and fidelity.

| Approach | Best for | Pros | Cons | Estimated Cost |
|---|---|---|---|---|
| Manifest + segment assembly | Streaming platforms, low bandwidth | Efficient, accurate, lower egress | Requires manifest parsing & token handling | Low-Medium |
| Headless browser capture | Tokenized endpoints & dynamic clients | Mimics real client, captures dynamic flows | Resource heavy, needs orchestration | Medium-High |
| HTTP API (official, if available) | Institutional partners with access | Stable, legal, full metadata | Requires partner access / costs | Variable (often subscription) |
| Third-party ingestion services | Fast POC, media license workflows | Turnkey, scales quickly | Opaque processing & recurring fees | Medium-High |
| Mobile-device farm (real devices) | Anti-bot heavy platforms | Highest fidelity, evades many heuristics | Very expensive, complex ops | High |

7. Proxying, Anti-Bot and Resilience

7.1 Respectful rate limiting and politeness

Before scaling, implement rate limiting per origin and adaptive backoff. Scrapers that mimic realistic user behavior and respect robots.txt reduce long-term bans. When platforms explicitly block crawlers, analyze the impact in the wider creator and platform context with readings like What to Expect When AI Bots Block Your Content and Blocking AI Crawlers: What It Means for Avatar and Content Creators.
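As a rough illustration of per-origin rate limiting and adaptive backoff, the sketch below combines exponential backoff with full jitter and a minimum interval between requests to the same origin. The names `backoffDelayMs` and `OriginLimiter` and all default values are assumptions for this example, not recommendations for any specific platform.

```javascript
// Sketch: exponential backoff with full jitter. `attempt` starts at 0.
function backoffDelayMs(attempt, baseMs = 500, capMs = 60000, random = Math.random) {
  const ceiling = Math.min(capMs, baseMs * 2 ** attempt);
  return Math.floor(random() * ceiling);
}

// Sketch: enforce a minimum interval between requests to the same origin.
class OriginLimiter {
  constructor(minIntervalMs, now = () => Date.now()) {
    this.minIntervalMs = minIntervalMs;
    this.now = now; // injectable clock for testing
    this.nextAllowed = new Map();
  }

  // Reserves the next slot for this URL's origin and returns how long to wait (ms).
  reserve(url) {
    const origin = new URL(url).origin;
    const t = this.now();
    const slot = Math.max(t, this.nextAllowed.get(origin) ?? 0);
    this.nextAllowed.set(origin, slot + this.minIntervalMs);
    return slot - t;
  }
}
```

A caller would wait for the value returned by `reserve(url)` before each request and, after a throttling response, wait an additional `backoffDelayMs(attempt)` before retrying.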

7.2 Proxy selection and rotation strategy

Residential proxies can provide the best success rate but come with cost and compliance concerns. Build a tiered proxy pool: cheap datacenter for low-value targets, premium residential for high-value or aggressive anti-bot sites.
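A tiered pool can be as simple as a lookup from target attributes to a tier, with round-robin selection inside it. In the sketch below the tier names, the `highValue` and `aggressiveAntiBot` flags, and the proxy URLs are all illustrative assumptions.

```javascript
// Sketch: choose a proxy tier per target, with round-robin rotation inside the tier.
// ASSUMPTION: tier names, target flags, and proxy URLs are illustrative only.
class TieredProxyPool {
  constructor(tiers) {
    this.tiers = tiers; // e.g. { datacenter: [...], residential: [...] }
    this.cursors = {};
  }

  pick(target) {
    const tier = target.highValue || target.aggressiveAntiBot ? 'residential' : 'datacenter';
    const proxies = this.tiers[tier];
    if (!proxies || proxies.length === 0) throw new Error(`no proxies in tier ${tier}`);
    const i = this.cursors[tier] ?? 0;
    this.cursors[tier] = (i + 1) % proxies.length;
    return { tier, proxy: proxies[i] };
  }
}

const pool = new TieredProxyPool({
  datacenter: ['http://dc1.proxy.example:8080', 'http://dc2.proxy.example:8080'],
  residential: ['http://res1.proxy.example:8080'],
});
console.log(pool.pick({ highValue: false }));
```

Keeping tier selection in one place makes it easier to audit which targets consume the expensive residential tier.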

7.3 Signal hygiene and fingerprinting countermeasures

Use controlled, audited client environments to avoid unexpected fingerprints. For higher security teams, follow the threat-model hardening checklist in Autonomous Desktop Agents: Security Threat Model.

8. Media Analysis: From Frames to Signals

8.1 Frame sampling strategies

Short-form vertical videos require different sampling strategies: sample more frequently for fast cuts, and align sampling to audio beats if you care about caption alignment. Frame-level metadata (face bounding boxes, text overlays) should be timestamped to enable sequence models.
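A simple starting point for sampling is to compute evenly spaced timestamps at a configurable rate and attach them to per-frame results. The sketch below does only that. Aligning samples to audio beats is not covered, and both function names and the detection shape are hypothetical.

```javascript
// Sketch: evenly spaced sample timestamps in seconds, centered within each interval.
// Raise `samplesPerSec` for clips with fast cuts.
function sampleTimestamps(durationSec, samplesPerSec) {
  if (!(durationSec > 0 && samplesPerSec > 0)) return [];
  const count = Math.max(1, Math.round(durationSec * samplesPerSec));
  const step = durationSec / count;
  const out = [];
  for (let i = 0; i < count; i++) {
    out.push(Number(((i + 0.5) * step).toFixed(3)));
  }
  return out;
}

// Attach timestamps to per-frame detections so sequence models can order them.
// `detectionsPerFrame[i]` is whatever your detectors returned for sample i (assumed shape).
function timestampDetections(timestamps, detectionsPerFrame) {
  return timestamps.map((t, i) => ({ t, detections: detectionsPerFrame[i] ?? [] }));
}

console.log(sampleTimestamps(4, 1));
```

Each timestamp can then be handed to a frame extractor, for example as a seek position for FFmpeg.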

8.2 Captioning, ASR and translation pipelines

Speech-to-text and caption alignment are core for discoverability and translation. Our practical pipeline recommendations for newsroom-grade speed and quality are in AI-augmented Translation QA Pipeline, which you can adapt to short vertical clips.

8.3 ML features specific to vertical formats

Vertical-first features include gaze distribution (where overlays sit), top-to-bottom text gradients, and music attribution. Train models to treat vertical framing as a distinct class because typical horizontal-trained models underperform on composition-dependent tasks.

9. Real-World Case Studies and Hardware Considerations

9.1 Field capture and edge AI

For on-location capture and immediate analysis, edge hardware and camera integrations are valuable. The design choices in portable setups are similar to those covered in our Modular Transit Duffel — Field Notes on Camera Integration and the portable field kits used in heritage projects in Field Case Study: Capturing Authentic Vouches.

9.2 Home/mini-server processing for small teams

Small teams can process vertical video on compact hardware. The Mac mini M4 is a cost-effective option for a home media server and local transcoding; see benchmarks and configuration tips in Mac mini M4 as a Home Media Server.

9.3 Pop-up workflows and in-person capture events

Events and pop-ups produce bursts of vertical content that require temporary ingestion capacity and offline sync strategies. Holiday market and pop-up vendor tech reviews like Holiday Market Tech Review 2026 illustrate practical tradeoffs for temporary setups.

10. Compliance, Ethics and Creator Relations

10.1 Legal considerations and platform policies

Content licensing, API Terms of Service, and local privacy laws determine how much you can store, analyze, or expose. When platforms tighten AI-related protections, you must adapt or seek official data partnerships. For discussion on how creator communities react to blocking, see What to Expect When AI Bots Block Your Content.

10.2 Ethical design: consent and attribution

When scraping creator content, prioritize attribution and rate-limit data exposure. Where possible, filter out content from creators who explicitly opt out of data harvesting and follow takedown procedures proactively.

10.3 Communication with creator ecosystems

Partnering with creators and platforms reduces friction. Creator playbooks such as the Advanced Playbook for Urdu Creators can inspire respectful data-use practices and improve quality signals for your models.

11. Implementation Checklist and Sample Pipeline

11.1 Minimal viable pipeline (MVP)

Build an MVP that proves accuracy before scaling:

1. Headless browser to authenticate and capture manifest URLs
2. Manifest parser and segment assembler
3. FFmpeg concatenation and thumbnail extraction
4. ASR + OCR + metadata enrichment
5. Store raw and normalized assets in a time-series or object store with lifecycle policies

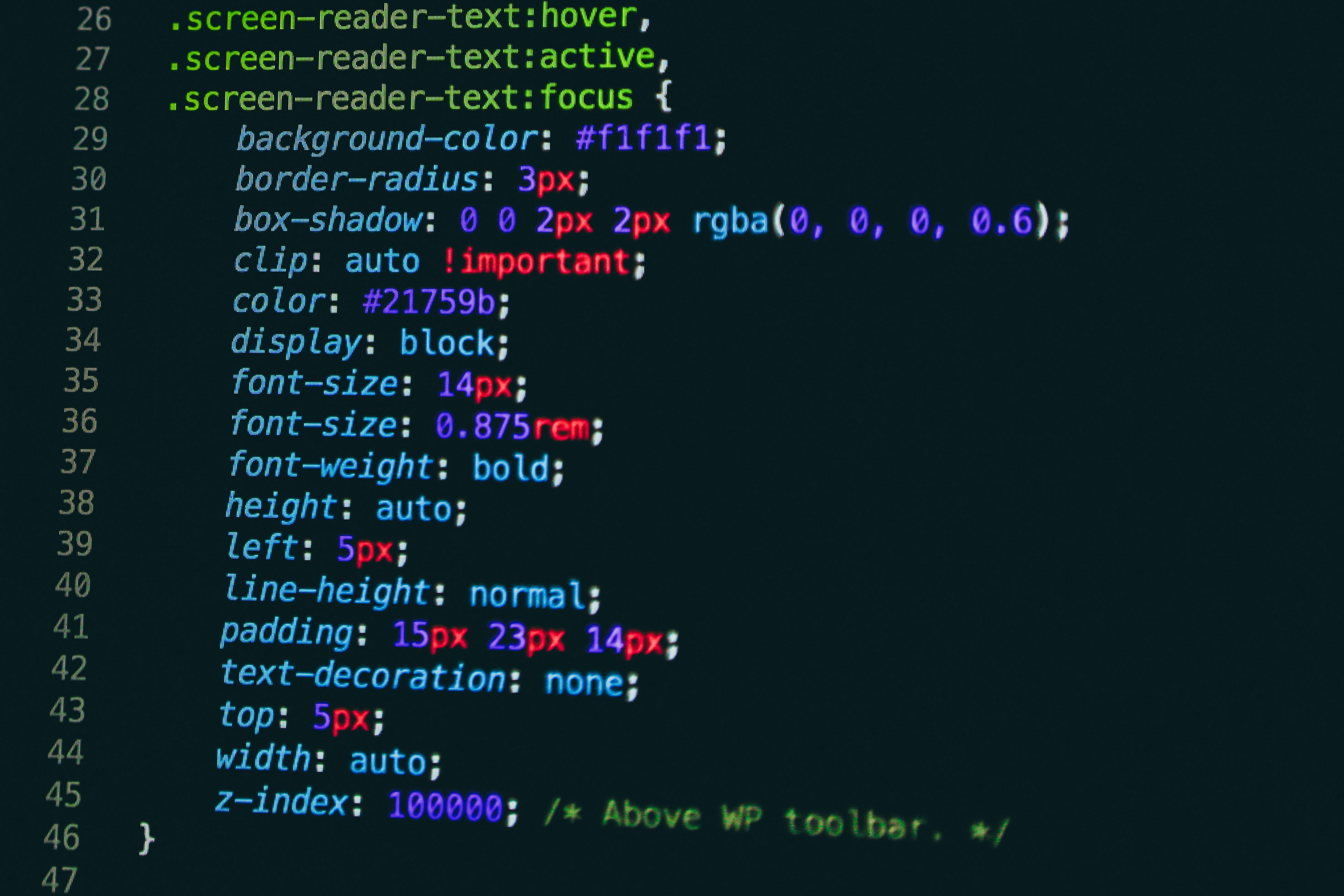

11.2 Example Playwright snippet (conceptual)

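```javascript
// Conceptual: emulate a mobile device, start playback, and log media/manifest requests.
const { chromium, devices } = require('playwright');

(async () => {
  const browser = await chromium.launch({ headless: true });
  try {
    // Use a built-in device descriptor rather than a placeholder user-agent string.
    const context = await browser.newContext({ ...devices['Pixel 5'] });
    const page = await context.newPage();
    // Manifests are usually fetched via XHR/fetch, so match on URL as well as resource type.
    page.on('request', (req) => {
      const url = req.url();
      if (req.resourceType() === 'media' || /\.(m3u8|mpd)(\?|$)/.test(url)) console.log(url);
    });
    await page.goto('https://example.com/vertical-page');
    await page.click('.play-button');
    // Give the player time to request its manifest and first segments before closing.
    await page.waitForTimeout(5000);
  } finally {
    await browser.close();
  }
})();
```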
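Once request URLs are being logged, a small classifier can separate manifests from media segments and unrelated traffic. The sketch below relies on file extensions only, which is an assumption. Some platforms serve manifests from extensionless paths, in which case checking the response `Content-Type` (for example `application/vnd.apple.mpegurl` or `application/dash+xml`) is a more reliable signal.

```javascript
// Sketch: decide whether a captured request URL looks like a streaming manifest.
// ASSUMPTION: extension-based matching; extensionless manifest URLs fall through to 'other'.
function classifyMediaUrl(rawUrl) {
  let pathname;
  try {
    // Use the pathname so query-string tokens do not affect the match.
    pathname = new URL(rawUrl).pathname.toLowerCase();
  } catch {
    return 'invalid';
  }
  if (pathname.endsWith('.m3u8')) return 'hls-manifest';
  if (pathname.endsWith('.mpd')) return 'dash-manifest';
  if (/\.(ts|m4s|mp4|m4v|webm)$/.test(pathname)) return 'media';
  return 'other';
}

console.log(classifyMediaUrl('https://cdn.example.com/v/1/index.m3u8?token=x'));
```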

11.3 Operational runbook items

Document retry logic, token refresh cadence, error classification, and data retention rules. Practice incident scenarios like mass token expiry and rapid platform policy changes — this kind of playbook is essential for resilient operations, similar to runbooks used in transit and edge architectures described in Edge‑First Onboard Connectivity for Bus Fleets.
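Error classification in a runbook can start from a small mapping of HTTP status to operational action. The mapping below is an assumption for illustration. For instance, a 403 may mean an expired token on one platform and a block on another, so adjust it to what you observe.

```javascript
// Sketch: map an HTTP status to an operational action.
// ASSUMPTION: this mapping is illustrative and must be tuned per platform.
function classifyHttpError(status) {
  if (status === 401 || status === 403) return 'refresh-token';
  if (status === 429) return 'backoff';
  if (status === 408 || (status >= 500 && status < 600)) return 'retry';
  if (status >= 400 && status < 500) return 'fatal';
  return 'ok';
}

// Count actions over a window of responses, e.g. to spot a mass token expiry.
function summarizeActions(statuses) {
  const counts = {};
  for (const s of statuses) {
    const action = classifyHttpError(s);
    counts[action] = (counts[action] || 0) + 1;
  }
  return counts;
}

console.log(summarizeActions([200, 403, 403, 429, 503, 404]));
```

A sudden rise in the `refresh-token` count across many sessions is the kind of signal that should trigger the mass token expiry scenario in your runbook.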

FAQ — Common questions about vertical video scraping

Q1: Is scraping vertical videos legally safe?

A1: It depends. Scraping publicly accessible content may still violate Terms of Service or copyright law. Always consult legal counsel and prefer official APIs or partnerships when possible.

Q2: How do I handle expiring manifest tokens?

A2: Use headless sessions to obtain fresh tokens and cache manifests only for their valid lifetime. Refresh tokens in the background before they expire, and apply backoff so that refreshes do not generate suspicious traffic.

Q3: Should I capture raw segments or rendered final video?

A3: Capture both if possible. Raw segments preserve original quality and metadata; rendered final video shows overlays and user-facing composition. Which you store depends on compliance and storage costs.

Q4: What are cost-effective ways to transcode many short videos?

A4: Use spot or GPU instances for batch transcodes, push lightweight tasks (e.g., thumbnailing) to edge nodes, and centralize heavy inference. For local teams, a compact machine can be a good short-term solution; see Mac mini M4 as a Home Media Server.

Q5: How do I maintain good relations with creators?

A5: Be transparent about data use, provide attribution, offer opt-out mechanisms, and consider monetization-sharing where relevant. Study creator-focused materials like Advanced Playbook for Urdu Creators to build respectful integrations.

12. Buying Guide: Choosing the Right Stack

12.1 When to buy vs build

If time-to-market and compliance are priorities, buying an ingestion service may be preferable. Build only when you need deep metadata, custom anti-bot strategies, or on-premise control.

12.2 Key vendor evaluation criteria

Evaluate vendors for: manifest handling, token refresh support, orientation-aware processing, pricing model (per-video vs throughput), API stability, and SLAs for data freshness. Consider the operational patterns and trade-offs discussed in Headless Scraper Orchestration in 2026 when comparing orchestration capabilities.

12.3 Recommended starter stack

Starter stack (balanced cost and control): Playwright headless agents in Kubernetes, Redis for short-lived token caching, an S3-compatible object store with lifecycle policies, FFmpeg workers, and a lightweight ML inference tier for captions and OCR. For edge or seasonal pop-ups, borrow tooling and lessons from the field reviews at Holiday Market Tech Review 2026 and hardware recommendations in Modular Transit Duffel — Field Notes on Camera Integration.

Conclusion

Vertical video has reshaped content creation and consumption patterns. For scraping and media analysis teams, the rise of vertical formats requires rethinking detection, ingestion, and processing pipelines — from manifest-aware downloaders and edge-first agents to orientation-aware ML models and respectful anti-bot strategies. Use headless orchestration patterns, adopt hybrid edge/central processing, and prioritize creator ethics and compliance. For extra reading on adjacent operational themes — from field kits to creator playbooks — explore the resources we referenced throughout this guide, including Headless Scraper Orchestration in 2026, Autonomous Desktop Agents: Security Threat Model, and practical field studies like Field Case Study: Capturing Authentic Vouches.

Avery K. Morgan

Senior Editor & SEO Content Strategist, scraper.page

Senior editor and content strategist. Writing about technology, design, and the future of digital media. Follow along for deep dives into the industry's moving parts.
